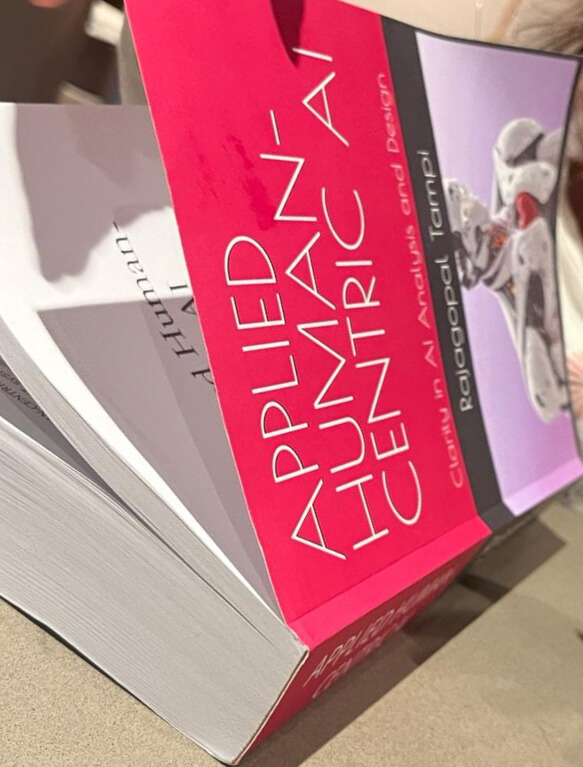

My book ‘Applied Human-Centric AI’ puts the human being, and the resources needed for our survival, at the centre of the AI/ML analysis and design process.

We want AI to be useful to us and would like it to start by doing our grunt work. We do not want it to replace human thinking, choice or free will, or to disturb our peaceful existence. Yet, as we have seen, AI has grown haphazardly around us, with deepfakes, AI image creation and the like being misused by misguided individuals and actors, leading to human and political turmoil. Particularly where regulations are not available, ‘laissez-faire’ does not work for AI, because it can harm innocent people. My book provides the tools to understand these issues during analysis and design, and explains the remedial measures and processes for avoiding these harmful aspects of AI. Below are some excerpts from my book:

Loss of human control to AI systems is not a single event but a continuum. The more HDRs that are implemented in AI, the closer we move to higher levels of LHC. Absolute loss of control within the riskiest AI use-cases can imply loss of life/lives and can be considered an event. Leading up to this event are gradations of loss of human control, which span loss of thought control, preference control……

….to arrive at the choice of the next appropriate action to be performed. This complexity management, for making the correct choice is at the heart of the problem that needs to be ultimately solved by AI systems. Specifically, filtering inputs, thoughts, alternatives, and choosing the appropriate decision through learning, that a human being would normally choose, in a specific circumstance, in a real time manner.

The risk categorisation methodology used in the human centric AIDP model is based on the risk/harm that defects/bugs can inflict on humans and the planet. This classification is explained….

The objective of this book is to provide a well-researched techno-ethical-engineering model to programmers, designers, and managers to navigate the complexities and fuzziness introduced by ethics, privacy, bias and such soft aspects into AI analysis, design and development. In the future, the industry will no doubt be required to provide clarity on these aspects of AI. The responsibility will then fall on the shoulders of programmers, designers and managers of the companies creating AI systems…..

Four core focus areas of a company developing AI products and services should be Traceability, Explainability, Verifiability and Validation (TEVV). These four factors are among the core components built into the AIDP model and can become the differentiators….

It is to handle cases such as the two mentioned above, among other types of errors, that the Feature Certification Testing (FCT) process has been devised. The Phase-End Model Synchronisation (PEMS) process ensures that code-change-initiated bugs in data and data-change-initiated bugs in code are eliminated.

The AIDP model calls this the “Subservience” principle. Subservience design will ensure that control is handed back to the human being for human oversight in the case of an “edge case” happening.
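To make the idea of handing control back concrete, here is a minimal Python sketch. It is not taken from the book: the names `Decision` and `decide`, and the use of a confidence threshold to detect an “edge case”, are assumptions made purely for illustration. The AIDP model may define edge cases and the hand-back mechanism quite differently.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    action: str
    decided_by: str  # "ai" or "human"


def decide(
    confidence: float,
    ai_action: str,
    ask_human: Callable[[], str],
    threshold: float = 0.9,  # hypothetical cut-off, chosen only for this sketch
) -> Decision:
    """Return the AI's action, or defer to a human when confidence is low.

    Assumption: an "edge case" is approximated here as any situation in
    which the system's confidence falls below `threshold`.
    """
    if confidence < threshold:
        # Edge case: control is handed back to the human for oversight.
        return Decision(action=ask_human(), decided_by="human")
    return Decision(action=ai_action, decided_by="ai")


# Routine case: the AI acts on its own.
routine = decide(0.95, "proceed", ask_human=lambda: "stop")

# Edge case: the human's choice overrides the AI's proposal.
edge = decide(0.40, "proceed", ask_human=lambda: "stop")
```

In this sketch the human's answer always wins once the system defers, which is one simple way to read “subservience”; a real system would also need to log the hand-back and handle the case where no human responds.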

The e-book can be purchased from the Amazon Kindle Store by searching for the name of the book in a browser. A paperback version is also available for purchase. Hope you enjoy reading!
