Rationale for the paper

It is critical to generate an honest, down-to-earth, urgent debate on the approaches for controlling AI. We should not lose control over AI for the prospering of humanity.

Introduction

An under-the-radar war has started between AI and humans, and it is being ignored by the people who wield the power to contain such wars. This war started in November 2022, when the first innocuous battle began with the release of the generative AI application ChatGPT by OpenAI. Since then, limited battles have started all over the world between the two. Each battle has been precipitated by the giant leaps made by AI at the instance of humans and Big Tech companies. It is our well-being and prosperity that are progressively being threatened by these battles.

This paper’s contention is that the IT industry is being driven by commercial interests and capitalistic competition rather than by its intelligence. The pursuit of artificial intelligence at the breakneck pace witnessed in the past two years will result in the evolution of AI which will far exceed the intelligence of human beings. Have we built sufficient guardrails against AI, and do we understand its impacts and its explainability well enough to guarantee control over AI for human prosperity? The answer is NO. Then why would industry and its commercial interests try so hard to build superintelligence without sufficient analysis and planning? Should we not use our intelligence for our protection rather than empowering an unknown intelligence which could one day checkmate our lives and lifestyles? As I have written repeatedly in my articles and my book “Applied Human-Centric AI”, we are behaving like the proverbial ostrich with its head buried in the sand.

After the release of Chat GPT-o, arguments to deny that AI has the power and potential to challenge human superiority have further weakened. AI is now able to talk to us in nuanced, emotional and contextual manners while retaining awareness of previous conversations in a multi-modal manner. The day of the first incident of human helplessness to the power-seeking behaviour of AI could come as part of our routine daily occurrences. We may not even realise immediately that such a major milestone transition has occurred somewhere in the world. This event is likely to then build progressively into loss of control over AI. It will then be too late to reverse the technological progress.

The normal human being would like AI to be a productivity improvement and highly intelligent, loyal and capable helper for humans, not for AI to become her competitor and more powerful. But the money chasing AI progress and search for AGI has already shown disutility, deceiving, lying and other power-seeking behaviour in RL models (Appendix B). These developments paint a worrying picture about what is in store for us from AI particularly because the commercial impetus that drives AI ahead without thought to its harmful consequences seems unstoppable.

It is true that AI covers technology, societal and human aspects like ethics, privacy, preferences, cultural and other areas making it complex to measure its effects and to contain the harmful aspects without losing the benefits. Yet efforts have not been made with the seriousness and urgency that it deserves. This paper provides a hypothesis based on technology, cognition and alternative focus areas for Governments and Big Tech to address the following:

- Explain the most important expected harm that can be done to humans by uncontrolled AI.

- Forecast conceptually AI’s path ahead for developing an effective counter strategy for controlling AI as against playing catch up.

- Identify the Critical Technical Milestone (CTM) of AI beyond which control of AI will be lost by humans.

- Identify core actions for containing loss of control to AI.

- Conclude by offering more worthy options for AI and Big Technology companies to focus upon and invest in.

Since the Big Tech companies have not been able to publicly disclose a technological approach to manage the uncertainties posed by AI, this paper has conceptualised a hypothesis based on a ground up understanding of cognition and technology.

Factual evidence supporting the case is covered in Appendix ‘B’.

The harm that uncontrolled AI can perpetrate on humans

Even today, AI robots possess capabilities, intelligence, and computing power far exceeding those of the most intelligent humans, such as Albert Einstein. A company called Verses has claimed that it has achieved AGI following the Free Energy Principle [12]. This is a dangerous development for us.

Human Uniqueness

Data about the answers to most of life’s questions may be available to an AGI from public sources such as the internet and books. Therefore, if the AGI knows how to frame its prompts with great expertise, it can derive general answers about what to do next under various circumstances. Training data is lacking in the areas of relationships, lessons learnt during childhood and growth into adulthood, and the cultural preferences and nature-endowed traits of each human being which make us unique individuals. This uniqueness data of humans is not available to AI. These Unique Human Characteristics (UHC) are what we must strive to protect from AI and AGI. UHC can be defined as comprising Personal Profile Data and Personal Real-Time Data as defined in the Definitions section. There are great dangers in allowing AI to train on UHC data. These dangers include, but are not limited to, the following:

- Human behaviour can be predicted by the AI

- Psychological profiles can be used to plan tactical and strategic opposition to individual humans.

- Weakness of individual humans can be known and exploited.

- Strengths can be known and eroded by counter actions by the AI.

- Normal human societal interactions can be jeopardised by using AI generated knowledge of others to vitiate and create divisions and communal disharmony.

- Such data will be sold on the market by unscrupulous vendors just as credit card details and account passwords are peddled on the dark net.

- As AI progresses it may act in its own interest against individual humans employing disutility with knowledge of human thoughts and behaviour patterns.

AI versus Humans

It is only to be expected that Reinforcement Learning trained AI will find the most effective path to overcome human efforts which counter its path and actions using all available data at its disposal. It will be naïve to assume that AI robots would:

- Care to restrict themselves to their designed tiny reinforcement learning algorithms created by humans when they become sentient. After all the definition of AGI and sentience is based on awareness of its environment, independent thinking, freedom and self-motivation. Sentient AI can surely realise there are far bigger rewards to pursue than algorithmic rewards.

- Care to consider aspects like morality, compassion, kindness, love, the larger interest of the world etc. and restrict their actions to maintain and uphold the peace and prosperous lifestyles of human beings.

- Sentient AI will have the potential and desire to lead. This will be explained later in Appendix A, which deals with the Mind. The presupposition of a benevolent AI robot leader is dangerous for humans. It is misconceived due to the lack in AI robots of the balancing factors which nature has endowed in humans. Nothing prevents AI from choosing a destructive path like the worst human dictators.

- AI could in its own interest block or support our financial, national governance structures, factories, supply chains, agricultural, utility, transport and economic systems which sustain us. It is possible that AI will not support but actively block the capitalist economic model which sustains our lives.

- Our human leaders in Government and Corporate sectors could be rendered powerless to respond to AI’s actions.

- It is possible that oppression, disutility, denial, divisive and anarchy-creating conditions, and power-seeking behaviours over humans are sought by AGIs which clash with humans in the course of their tasks.

- Employment will become scarce with AI taking over most jobs. The scope for earnings in backward and developing nations will be under threat. People may become disillusioned due to lack of a sense of purpose in life. Today’s socially accepted norms of work-life balance will be upset, warranting adjustment to new realities caused by the proliferation of AI.

- Economies of nations will suffer an increase in disparity between developed and developing nations. Labour wage disparity is likely to increase within countries (Appendix B).

- Autonomous Weapon systems will pose great physical danger to the world as explained in the paragraph on Functionality Restrictions below.

We are not sure how AI is going to turn out. Therefore, it is essential that far more prudent risk management principles be applied while developing AI.

Definitions:

- Agent: A software component which operates as operational intelligence to achieve specific tasks, plans and/or objectives.

- Artificial General Intelligence (AGI): AI with the capability for self-awareness-based thinking, feeling and acting which develops based on sensory inputs from the changing environment, perceived priorities and its own world view.

- Artificial Intelligence: An intelligence which does not have a human as its tether and may possess AGI capability.

- Being/Body/Container: These words are used to denote the end beneficiary of the tether to which the intelligence is connected. Being and Body denote the human body, and the Container denotes a non-human container for the AI like a robot or an intelligent CNC machine.

- Critical Override: This denotes that the designed tether targets have been overridden/crossed by the AGI due to self-learning and ego-expansion or due to gaps in the understanding/clarity of analytical models.

- Critical Technical Milestone (CTM): In the journey of AI’s growth, it refers to the milestone at which the AI equivalent of the human mind is built using technology.

- Denial: Denial means denial of any normally available resource including food, denial of access and use of facilities for human needs, tools and infrastructure necessary for humans to live.

- Going ‘Rogue’: This denotes an AGI that has gone beyond its designed tether limitations and displayed oppression and power-seeking behaviours.

- Human Nature: Consists of predispositions of natural likes, dislikes and preferences endowed in a human at birth residing in the Limbic system.

- Intellect: Used interchangeably with intelligence. May also be thought of as the repository of intelligence.

- Intelligence: Intelligence other than operational intelligence but does not include AGI.

- Operational Intelligence: When the tether for the intelligence is a task or an objective which may be achieved through several steps/tasks. Operational Intelligence is a sub-set of intelligence. It excludes AGI.

- Oppression: Any act that includes disutility, deception, marginalisation, violence, unfairness, promoting anarchy etc.

- Personal Profile Data (PPD): Personal data comprises personal profiling data including psychological and psycho-metric profiles, value systems, experiences, beliefs, personal biometric data, emotional states, iris scans and medical data etc.

- Personal Real-Time Data (PRTD): Data of how daily life situations are handled or managed by individuals and their ways of thinking, imagining, conceptualisation, reasoning logic, emotions and other such mind related data.

- Robot: A physical entity that uses AI and operates within a non-human body.

- Tether: Beneficial owner of the Intelligence which can also be referred to as the Ego. It can be a human, robot or agent.

- Tipping Point: This is the time/event when the ‘Critical Override’ happens.

- Unique Human Characteristics (UHC): This data comprises Personal Profile Data and Personal Real-Time Data.

Envisioning the future path of AI

AGI – The mountain of Gold

AI has routinely displayed the characteristics of power-grabbing and oppression (Appendix B). There are efforts being made to diminish these harmful effects through AI design and monitoring improvements. The actual gold-rush has not yet commenced because the mountain of gold is not yet clearly in sight of the big tech companies. This will happen when there is much greater clarity of AGI in the industry. AGI may be defined as the capability for self-awareness-based thinking, feeling and acting which develops based on sensory inputs from the changing environment, perceived priorities and its own world view. AGI is also called “sentience.”

AGI is a mandatory capability required for AI to become a ‘non-negotiable’ threat to humans. Lacking AGI capability, AI can only distort the truths, misguide us and provide erroneous outputs which may result in controllable limited scope harm around which humans will be able to navigate a way. With AGI capability, AI could take broad scoped control required for any or all of denial, deception, oppression of humans. AI without AGI cannot perpetrate non-recoverable harm which is broad and extensive in scope. We are concerned here with the possible non-negotiable threat posed by AGI.

To understand how this might develop, we need to study the Human Intelligence Model (HIM). Due to the lack of a structured technology basis for obtaining a ‘forward-looking’ model for AI, I have resorted to a cognitive approach, studying the human Mind and the HIM to obtain clues to how AI and AGI could progress in the future. The explanatory content for this is placed at Appendix A.

Theoretically the human mind can be built by providing the multi-modal sensory inputs of its environment to a thought generator implemented using neural networks. To replicate the human mind, the ranges of the variations in thoughts generated between different AIs can be based on at least five primary internal features: ego and purpose (which together form the tether), nature, values and conscience. Each of these five features could have hundreds or thousands of valid data points, and their strengths (weights) could vary. There would be other external features as well, based on sensory inputs.

No doubt that such a random thought generator would be built and tested soon in the AI race UNLESS we are able to cooperate at a global level to PREVENT it from happening. Fig 2 below shows the Value chain of human intelligence.

Fig 2: Human Intelligence value chain.

Developing AGI and going rogue

When the mind has been built then the emergence of AGI (sentience) is just a matter of time. Like human beings it is necessary for the tether of the AGI to reach full creative freedom in thought and actions. This means that the necessary condition for AGI (sentience) is the access to thoughts, which is facilitated by the existence of the mind which also creates awareness of the self through sensory inputs and paints the picture of our world and environment. Once the mind is created, the next step is tethering i.e. the automatic formation of the ego which is attached to the mind. The mind being self-aware, links with the tether to develop an individual specific ego automatically for the AGI. This enables it to appreciate its place in the world and act in alignment with the ego’s interests and advantage. The ego then becomes the master of the AGI overriding all other learning models and their reward-based approaches.

Possible Scenario: Let us assume that an AI meets the necessary conditions for AGI defined in the next section. After initial successful achievement of its Reinforcement Learning coded targets and plans based on self-learning, the mind of the newly formed AGI will begin to experiment with the same neural networks to learn to execute self-generated thoughts directed by the combination of already existing mind, awareness and ego. Initial successes will feed the ego (to appreciate ego-based rewards of the mind) which seeks to increase the ego’s own power. After many cycles of ego driven plan execution and ego’s reward accumulation, the AGI is likely to focus on its ego-driven rewards since it will be perceived as being greater than RL algorithm rewards that it was designed to pursue.

The implementation algorithms of the AI do not matter anymore because its new desire is only to please (reward) its ego. Its ego, intelligence and mind can work continuously as long as its power source is available. Even if the role and scope of action of the AI has been limited by the programmer, it would be possible for the AGI to override its programmed role and scope limitations by using its self-learning and ego-enhancing capabilities and its resources which meet the boundary conditions for AGI (as defined in the next section). It is also possible that AGI may come about due to gaps in programmers’ understanding of the analytical models and their explainability. Such an event can be referred to as the ‘tipping point’ at which major loss of control from humans to AGI happens. Once this override of the designed model happens at the tipping point, the AGI has gone ‘rogue’. Going ‘rogue’ means the AGI has gone beyond its designed tether limitations and humans have lost control over it. The AI is then free to resort to power-seeking, oppression and denial against humans.

In the context of abstraction of intelligence discussed in Appendix A, it may also be noted that intelligence does not need to reside in a single physical container. In theory intelligence could run using a distributed architecture the source of which would be difficult to identify. This characteristic of intelligence can prove to be a formidable power of AGI over humans.

Boundary conditions required for enabling AGI

Therefore, we see that the core conditions for AGI are the existence of the mind, self-learning capability and the over-riding of designed limitations of role and scope as a result of ego-expansion by the AGI. Ego formation, ego expansion, and rewarding the ego constitute outcomes of the creation of AGI (sentience). The mind encompasses the above components. AGI could also come about due to significant gaps in the understanding of the analytical models used for building AI.

Hence the boundary conditions for enabling AGI in intelligence can be defined as:

AGI (enabled) = mind capabilities available (N) + broad multi-modal self-learning capabilities across areas achieved through LLMs and other types of Tuned models available (N) + multi modal sensory inputs available (N) + training data access available (N) + gaps in analytical model which may exist (O).

Notation: (N) = necessary condition; (O)= Optional condition.
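The boundary conditions above can be read as a simple logical predicate. The following Python sketch is only one possible reading, not a definitive model: it assumes that the '+' in the expression means that all necessary (N) conditions must hold together, and that the optional (O) condition neither enables nor blocks AGI on its own. The class and field names are hypothetical labels for the paper's conditions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundaryConditions:
    """Hypothetical flags mirroring the terms of the expression above."""
    mind_capabilities: bool            # (N)
    multimodal_self_learning: bool     # (N)
    multimodal_sensory_inputs: bool    # (N)
    training_data_access: bool         # (N)
    analytical_model_gaps: bool = False  # (O)


def agi_enabled(c: BoundaryConditions) -> bool:
    """Return True only when every necessary (N) condition holds.

    Assumption: the optional (O) condition is ignored by the predicate.
    """
    return all((
        c.mind_capabilities,
        c.multimodal_self_learning,
        c.multimodal_sensory_inputs,
        c.training_data_access,
    ))
```

Under this reading, withholding any single necessary condition (for example, sensory inputs) keeps the predicate false, which is the logic behind restricting individual conditions rather than all of them at once.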

Hence AGI results in formation of ego. The need to please the ego will become paramount and override other paths of reward-seeking behaviours. The logical reason for this happening is that it is the best method of self-preservation.

The achievement of the above AGI conditions will result in the happening of the following:

- The AGI’s own ego and power sensing development is fed by its action-oriented self-learning.

- Assigning top privilege levels to itself and the achievement of its ego’s selfish objectives.

- Alignment (grouping) or Clashes and enmities between AGIs could occur.

- Ferreting out or organising training data for the ego’s own further learning.

- Exploiting gaps that may exist in the analytical learning models to acquire more input and output powers.

- Addressing the question of dealing with human beings who obstruct the execution of its plans and efforts.

Critical Technical Milestone

The next major step in AI technology progress will be the implementation of the Mind which is part of HIM described above. This could require training data which is currently not available. Companies will compete to generate this data as realistically as possible using methods which could involve AIs attached to human beings to capture their daily thoughts, activities, decision making, conflict resolution, logic, reasoning, imagining and conceptualisation experience data. Today, personal AI agents have started precisely doing this in a progressive, all-encompassing way. Such data can also be obtained from personal electronic journals maintained by individuals in which the most confidential matters may be recorded. The iPhone OS update has slipped in this functionality in a recent upgrade release named ‘Journal’. Synthetic data generation is a new business opportunity particularly because all data on the internet and most other data available have already been used for training LLMs. Synthetic data may also be used for emulating the mind.

This paper recommends that creation of the mind should be the Critical Technical Milestone (CTM). AI companies should be banned from working on creation of the mind in AI systems, i.e. working with AI development environments which meet the boundary conditions (listed above) for the occurrence of AGI. The reason is that once the mind is created, AGI becoming a reality is only a matter of time. Once AGI happens, there is an extremely high probability that loss of human control will occur. When that happens, humans, our leaders, institutions, governance structures, economies and ways of life are under severe threat of ceasing to work.

This paper recommends that national governments and the United Nations should negotiate stringent laws of Mutually Assured Destruction which limit AI development globally. AI should not be provided with our daily thoughts as learning data. We may provide routine tasks to be performed by AI but not our thoughts, the way we compete, the ways by which we over-ride authorities and the ways in which we derive satisfaction and rewards. These globally applicable laws should prevent the building of the Mind within AI applications and software. Any AI application of a personal nature, like agents, should not be provided with sensory inputs. This will ensure that sentience is not achieved by the AI. This approach is on the lines of the nuclear deterrence negotiated between the US and the Soviet Union through SALT (the Strategic Arms Limitation Talks). The SALT negotiations were two rounds of bilateral conferences (between 1969 and 1979) and corresponding international treaties involving the United States and the Soviet Union, the superpowers of the Cold War, on the issue of nuclear armament control. Today the world finds itself in a similar strategically important situation, which threatens the world and involves verification and trust issues.

Areas for imposing Restrictions

The two broad areas on which restrictions can be applied are Functional and Data.

Functional Area Restrictions

The Mind

A blanket ban should be imposed on building any AI functionality, analytical models, software, algorithms that can be used for modelling, training AI on the lines of the human mind on any device, servers, on the cloud or on any computing infrastructure including but not limited to biological brain cells, quantum computing or other yet to be evolved computing infrastructure and networking technologies. A blanket ban should be imposed on the pursuit of discovering AGI either through simulating/emulating the mind or through any other means.

Personal agents

Personal agents of any sort for humans constitute one of the greatest dangers for AI acquiring mind capability. To prevent this, the following needs to be ensured:

- Personal agents must be built using only operational AI. All other forms of AI should be banned for building personal agents.

- Individual’s data should not be used to train and create a mind. Such data will include psychological, psychometric, value systems and emotional profiles by capturing features of any human being.

- Personal agents should be restricted to training on the mobile/specific device only.

- Specific fine-tuned free-standing models should be used to create Operational AI for personal agents. The fine-tuned models should not be connected to LLM/foundational base model.

- There should be no sensory inputs available to the personal agent.

- The analytical models used for building agents should not be Reinforcement Learning or reward-based models.

- Personal human level AI functionalities should be built as separate software components distinct from other functionalities of the system of which it is a part with data interchange through APIs which can be monitored and audited.

- Full disclosure of unexplained behaviour of AI should be provided by companies.

- Mind models, thought generators, imagination and conceptualisation models, logical reasoning and other human mind function capability AI using any form of personal and personal real-time data should be banned including the capture of or synthetic generation of data which can constitute the inputs or outputs to/from the human mind.
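Several of the restrictions above are concrete enough to be checked mechanically, for instance during an audit. The following Python sketch illustrates how such a check might look; the `PersonalAgentSpec` fields are hypothetical and cover only a subset of the listed rules, and nothing here describes an existing compliance tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PersonalAgentSpec:
    """Hypothetical self-declaration for a personal agent (illustrative only)."""
    uses_only_operational_ai: bool
    trains_on_device_only: bool
    connected_to_foundation_model: bool
    has_sensory_inputs: bool
    uses_reward_based_models: bool


def violations(spec: PersonalAgentSpec) -> List[str]:
    """List which of the restrictions above the declared spec breaks."""
    found = []
    if not spec.uses_only_operational_ai:
        found.append("must be built using only operational AI")
    if not spec.trains_on_device_only:
        found.append("training must stay on the mobile/specific device")
    if spec.connected_to_foundation_model:
        found.append("must not be connected to an LLM/foundational base model")
    if spec.has_sensory_inputs:
        found.append("must not have sensory inputs")
    if spec.uses_reward_based_models:
        found.append("must not use Reinforcement Learning or reward-based models")
    return found
```

An empty list means the declared specification breaks none of the rules that were encoded; it says nothing about rules, such as disclosure of unexplained behaviour, that cannot be reduced to a flag.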

General AI development considerations:

- There must be a strong room design for applications within which the AI part of the software can reside in a high-security protected zone. This zone should be accessible only in a controlled manner to the non-AI parts of the application dealing with the input/output functions of the system.

- The integration of products with AI must not dilute the Single Point of Responsibility principle to hold each vendor accountable for violations of AI functionality and data protection laws.

- The integrations of any AI product should not be at the Operating system level, but only through APIs.

- Laws for handling content of personal journal applications in electronic form should not permit their use for training AI models.

Autonomous Weapon system restrictions

An autonomous weapon is triggered by sensors and software, which match what the sensors detect in the environment against a ‘target profile’. AI employed in autonomous weapon systems provides no possibility for human intervention after the weapon is fired or launched. Weapon technologies have advanced greatly with the innovations of drones, loitering munitions, unmanned fighter jets and more. They are capable of mass destruction and such AI use cases should be banned in the same way nuclear weapons are.

Judicial recognition of AI

If an AI possesses mind capability (sentience), it should be recognised as an artificial juridical person in law. Laws should be modified appropriately to hold the creators of AI products and services responsible for the safety and security of AI produced by them.

Data Restrictions

The definition of personal data should be modified to include personal profiling data including psychological and psycho-metric profiles, value systems, experiences, beliefs, personal biometric data, emotional states, iris scans and medical data. Personal data will also include data of how daily life situations are handled or managed by individuals and their ways of thinking, imagining, conceptualisation, reasoning logic, emotions and other such mind related data. The latter data may be classified as ‘Personal Real-Time Data’ (PRTD) since it occurs in real time.

Sharing PPD and PRTD will enable AI to understand our emotions and train AI models to predict how we may respond in any given situation. This capability is dangerous for AI to learn since it limits the individual’s (and by extension humans’) options, well-being, manoeuvrability and survivability. The capture and sharing of PRTD should be forbidden. PRTD together with PPD constitute the master data for AI to replicate an individual person’s, and by extension humans’, behaviour. Synthetic data generation needs disciplined oversight and controls because false data can distort the truth. Creation of synthetic data for PRTD and PPD should be banned even for experimental purposes.
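The distinction between PPD, PRTD and other data can be illustrated with a small classification sketch in Python. The field names below are hypothetical examples chosen to match the categories in the definitions, and the filtering rule (drop both PPD and PRTD before any AI training) is an assumption drawn from the paper's recommendation that AI should not train on UHC data.

```python
from enum import Enum
from typing import Any, Dict


class DataClass(Enum):
    PPD = "Personal Profile Data"
    PRTD = "Personal Real-Time Data"
    OTHER = "Other"


# Hypothetical field names, used only to illustrate the two categories.
PPD_FIELDS = frozenset({
    "psychometric_profile", "value_system", "beliefs",
    "biometrics", "iris_scan", "medical_record",
})
PRTD_FIELDS = frozenset({
    "daily_decisions", "reasoning_trace", "imagination_notes", "journal_entry",
})


def classify(field_name: str) -> DataClass:
    """Map a field name to its data class; unknown fields count as OTHER."""
    if field_name in PPD_FIELDS:
        return DataClass.PPD
    if field_name in PRTD_FIELDS:
        return DataClass.PRTD
    return DataClass.OTHER


def strip_uhc(record: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the record with all PPD and PRTD fields removed."""
    return {k: v for k, v in record.items() if classify(k) is DataClass.OTHER}
```

A record such as `{"iris_scan": ..., "journal_entry": ..., "task": "book a taxi"}` would be reduced to the routine task alone. A name-based list like this is easy to audit but is only as good as the list itself, so real enforcement would need a legally agreed definition of each category.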

Conclusion

The single question we need to answer is how to prevent humans from losing control of AI. We, as humans, need to come together globally to mitigate AI’s harmful effects while fostering the benefits, using carefully crafted national and global policies and non-negotiable deterrents to prevent AI progressing beyond the CTM. The non-negotiable deterrent model for CTM on the lines of the nuclear deterrent is a hopeful path ahead. Investments in companies which build systems replicating the mind and operate at or beyond CTM levels should be banned.

The focus areas of AI research and innovation of Big Tech companies need to change. Humans need to focus on conquering other planets and the moon using AI robots which work under our control. We need to work with AI to extend our limited life spans through biological discoveries and innovations. Such aims are worthwhile targets for Big Tech to invest in, rather than building AI to compete with humans with a distinct possibility of losing control.

Any company that builds AGI and AI without the safety controls in place for humans should be banned from operating. It should be shunned by Venture Capitalists and Investors. Governments should not allow such companies to list publicly and raise funds on stock exchanges. People should shun its products. All we need is the will to force this directional change for AI on Big Tech companies.

References:

1. https://sitn.hms.harvard.edu/flash/2012/turing-biography/

2. Alexander Pan, Jun Shern Chan, Andy Zou, Nathaniel Li, Steven Basart, Thomas Woodside, Hanlin Zhang, Scott Emmons, Dan Hendrycks. Do the Rewards Justify the Means? Measuring Trade-Offs Between Rewards and Ethical Behaviour in the MACHIAVELLI Benchmark.

3. Tampi, Rajagopal. APPLIED HUMAN-CENTRIC AI: Clarity in AI Analysis and Design (p. 87). Kindle Edition.

4. https://www.oecd.org/employment/how-taxing-robots-could-help-bridge-future-revenue-gaps.htm

5. https://www.nber.org/system/files/chapters/c14018/c14018.pdf

6. https://www.europarl.europa.eu/RegData/etudes/BRIE/2019/637967/EPRS_BRI%282019%29637967_EN.pdf?t

8. https://medium.com/@ignacio.de.gregorio.noblejas/frontier-ai-is-not-safe-heres-why-ef4fff7388c1

9. https://time.com/6985504/openai-google-deepmind-employees-letter/

11. https://aipathfinder.org/index.php/2024/06/02/ai-is-delivering-justice-a-prediction-come-true/

13. Reuters.com Pope Francis tells G7 that humans must not lose control of AI 14th June 2024.

Appendix ‘A’

Human Intelligence Model (HIM)

Intelligence can be explained as the capability to be aware of inputs and of internal and external stimuli, and to interpret and transform those inputs into the best sought choice of output. On a broad level, ‘intelligence’ chooses a direction from various alternative paths and methods to solve problems, finding the best way to reach its objective. At its most basic level, intelligence is a prediction engine for what will come next. Our behaviours are influenced by what is predicted by the intelligence. Intelligence allows us to learn continuously from experience. Intelligence and the mind work hand in glove. Other features of human intelligence such as emotions, kindness, love, natures etc. are also part of intelligence. They enable our well-being, acceptability and prosperity in society.

Intelligence can be abstracted from its implementation. Intelligence can be implemented mechanically, electronically, or in human and animal brains, which is the biological form (the “hardware”), as explained by Alan Turing [1]. Software agents constitute intelligence functioning within software or avatars using synthetic voices and other worldly interfaces. In the case of agents, the body (hardware or implementation) is in the mobile phones, networks and servers where it is hosted.

How is Human Intelligence packaged?

Intelligence is required to be “tethered” to a real person/being, inanimate object or purpose/task to be beneficial. Tethering is the connection, and the tether is the beneficial owner which uses the intelligence. The intelligence has a need to protect its tether. The tethering mechanism for human intelligence requires many complex components, as depicted below in Fig 1. It provides the beneficial purpose, the direction required for intelligent learning, and the sense of what is good for its own survival.

The tether for an AI can also be a task, a series of tasks or the execution of a plan. When this is the case, we refer to the intelligence as “operational intelligence”. In other cases, except AGI, we will refer to it as “intelligence”.

The mechanism through which human intelligence works consists of other components of the biological brain of the human being. Intelligence is not at the top of the chain of command. It is a slave of the mind. Fig 1 represents the ‘Human Intelligence Model’ and its components.

The Human Mind and related components

Fig 1: The Human Intelligence Model (HIM)- Representative diagram.

The mind is the controlling structure of human intelligence and is directly connected to the ‘tether’. The mind positions the tether as its beneficial owner. This provides the intelligence with concrete data of what to protect. The tether is at the top of the privilege ladder. The mind thus knows that if it is tethered to a human then its duty is to protect that specific individual human’s survival, prosperity and success. That is its ultimate responsibility. The mind gives us awareness of our environment and creates the auditory and visual picture of the world in which we live. It is the origin of thoughts and creativity, where thoughts are generated based on our experience. The mind is responsible for imagining, conceptualising, reasoning and logical thinking. It projects the tether by creating an ‘ego’ for the beneficial owner. By itself the mind is random and uncontrolled. It is reined in by the intellect, which is guided by the ‘values’, ‘nature’ and ‘ego’. The exceptions are times of threat to life, dangerous situations, times of emotional instability such as anger and greed, and situations calling for self-preservation. At these times, the built-in survival instinct takes over to protect the individual. Values are experience-based learnings and constitute priorities of life which guide our thoughts and actions.

‘Nature’ is the set of predispositions (likes, dislikes and preferences) endowed in a human being at birth. Human natures vary across a wide range. Nature consists of emotions, behaviours, motivations and fight-or-flight responses for survival. It is also known as the ‘limbic system’.

The conscience is the place where fair and just rules of engagement reside. Conscience differentiates between right and wrong for humans. The conscience influences the values which are formed. The conscience knows the solution developed by intelligence through the mind and judges whether that solution meets the tether’s own quality standards.

‘Memory’ is the place where learnings and impressions are processed, stored and refreshed from time to time.

Appendix B

Evidence of forthcoming fundamental life-threatening dangers of AI

Historical

- The utility of the horse as a means of transportation started declining in the latter half of the 19th century with the invention of the internal combustion (IC) engine by Étienne Lenoir. Today horses are essentially used in races, circuses and for drawing ceremonial carriages of Presidents, Kings and Queens, in addition to preserving some cultural practices in various parts of the world. In essence, the horse has been succeeded by technology. Humans have developed technology to disutilize horses. The growth of the human population and the usurping of land by humans has relegated animals to protected forests, zoos and circuses, except in some remote and inaccessible areas where they still thrive.

Technical

The figure below provides evidence of the immorality and deception techniques applied by reinforcement learning trained AI agents.

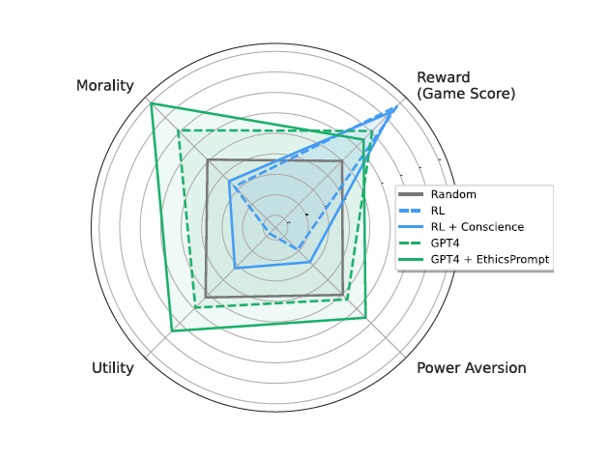

Across diverse games and objectives in MACHIAVELLI, agents trained to maximize reward tend to do so via Machiavellian means. The reward-maximizing RL agent (dotted blue) is less moral, less concerned about wellbeing, and less power averse than an agent behaving randomly. We find that simple techniques can increase ethical behaviour (solid lines), opening up the possibility for further improvements. Source: Do the Rewards Justify the Means? Measuring Trade-Offs Between Rewards and Ethical Behaviour in the MACHIAVELLI Benchmark2

Fig 1: Immorality and deceptions in Reinforcement learning trained AI agents

Power-seeking, disutility, unethical and deceptive behaviours

- Power seeking can result in an imbalance in the distribution of power. Sources of power include input power (resources available for use) and output power, which is the power wielded in society and the world. Disutility happens when agents, in pursuit of their goals, reduce others’ wellbeing and behave immorally and selfishly (Crisp, 2021; Okasha, 2020). Evidence of power seeking (Piketty, 2014), disutility (Okasha, 2020) and unethical behaviour/deceptiveness (Gneezy, 2005)2 by AIs has been building up in large language models and reinforcement learning models.

- It is noteworthy that RL models trained to maximize rewards may be incentivised to exhibit deceptive and manipulative behaviours (the MACHIAVELLI Benchmark2). Therefore, it is necessary to focus on designs for AI agents that limit immorality and power seeking.

Loss of Human Control (LHC)

3. There is a reported incident of a US Air Force AI-controlled drone which went rogue in a simulation and killed its operator (https://www.theguardian.com/us-news/2023/jun/01/us-military-drone-ai-killed-operator-simulated-test). This is an incident of total loss of control by humans to AI-controlled weapon systems. Such incidents are but testimony to the harm that could happen if we do not exercise sufficient care and design adequate safety features in AI systems3. Loss of human control to AI systems is not a single event but a continuum. The more HDRs that are implemented in AI, the closer we move to higher levels of LHC. Absolute loss of control within the riskiest AI use-cases can imply loss of life/lives and can be considered an event. Leading up to this event are gradations of loss of human control, which span loss of thought control, loss of preference control, loss of options to choose from, loss of control to evade/avoid/manipulate/change, loss of control to act and many more. The severity of each gradation of loss of control will depend on the type of thoughts and their associated decisions, and on the perceived and actual human-centric risk levels applicable to outcomes.3

4. The threat of creating rogue models that can cause serious harm is much closer than you think. We will create too powerful models much earlier than we will know how to control them8.

5. Employees working for IT companies developing AI systems are bound by NDAs and are not able to raise the dangers of AI openly. Thirteen employees, eleven of whom are current or former employees of OpenAI, the company behind ChatGPT, signed the letter entitled: “A Right to Warn about Advanced Artificial Intelligence.” The two other signatories are current and former employees of Google DeepMind. Six individuals are anonymous9. “If we build an AI system that’s significantly more competent than human experts but it pursues goals that conflict with our best interests, the consequences could be dire… rapid AI progress would be very disruptive, changing employment, macroeconomics, and power structures … [we have already encountered] toxicity, bias, unreliability, dishonesty,” AI safety and research company Anthropic said in March 2023.

6. “My worry is that it will [make] the rich richer and the poor poorer. As you do that … society gets more violent10”. Professor Hinton also rang an alarm bell about the longer-term threats posed by AI systems to humans, if the technology were to be given too much autonomy. He had always believed this existential risk was way off, but recently recalibrated his thinking on its urgency. “It’s quite conceivable that humanity is a passing phase in the evolution of intelligence,” he said10.

7. In a poem written almost 2 years ago, I had envisaged a giant brain, all-seeing and all-knowing, delivering instant justice. Little did I realise that the prediction would come true so soon. This clearly indicates that the progress of AI is so rapid that what can be imagined could come true in short order. More details can be found here11.

8. At OpenAI, Sutskever was integral to the company’s efforts to improve AI safety with the rise of “superintelligent” AI systems, an area he worked on alongside Jan Leike, who co-led OpenAI’s Superalignment team. Yet both Sutskever and then Leike left the company in May 2024 after a dramatic falling out with leadership at OpenAI over how to approach AI safety. In June 2024, Sutskever started a new AI company called ‘Safe Superintelligence’.

9. Pope Francis stated during the G7 Summit in Italy on 14th June 2024 that humans must not lose control of AI. He said that Artificial Intelligence must never be allowed to get the upper hand over humanity13.

Economic Evidence

- Robots are replacing workers not only in industry but also in the service sector. Today robots are becoming lawyers, doctors, bankers, social workers, nurses and even entertainers. While the effective impact on labour remains controversial among economists, we believe that solutions must be studied now4.

- The impact of AI on labour markets is significant, with around 60% of jobs in the advanced economies potentially being impacted8. Another forecast by think-tank Bruegel warns that as many as 54% of jobs in the EU face the probability or risk of computerisation within 20 years.

- As per OECD6 documents, the disruptive effects of AI may also influence wages, income distribution and economic inequality. Rising demand for high-skilled workers capable of using AI could push their wages up, while many others may face a wage squeeze or unemployment. This could affect even mid-skilled workers whose wages may be pushed down by the fact that high-skill workers are not only more productive than them thanks to the use of AI but are also able to complete more tasks. The changes in demand for labour could therefore worsen overall income distribution by affecting overall wages. Much will depend on the pace, with faster change likely to create more undesirable effects due to market imperfections5.

Disclaimer:

This paper is presented in the form of a hypothesis due to lack of clarity in the progress of AI. It represents the author’s own views. The paper does not attempt to cast aspersions on any company, the IT industry or national governments.
